Spring 2021 projects

Templates

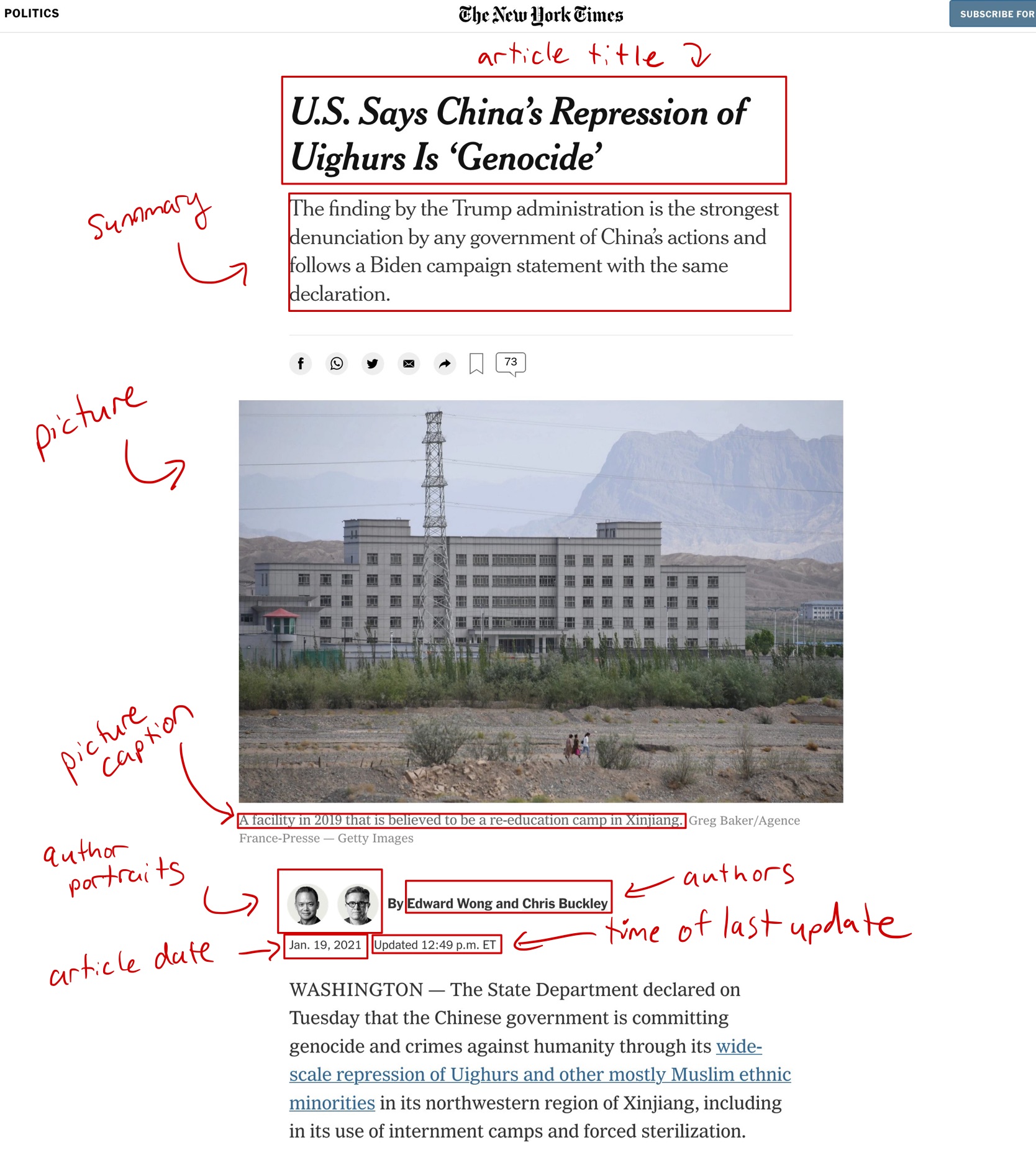

Our course project template can be found here, or on Scholar:

/class/datamine/apps/templates/project_template.Rmd

Important note: We've updated the template to allow a code chunk option that prevents content from running off the page. Simply add linewidth=80 to any code chunk that creates output that runs off the page.

This video demonstrates:

- opening a browser (emphasizing Firefox as the best choice),

- opening RStudio Server Pro (https://rstudio.scholar.rcac.purdue.edu),

- introducing (basics) about what RStudio looks like,

- checking to see that the students are using R 4.0,

- running the initial (one-time) setup script,

- opening the project template,

- knitting the template into a PDF file, and

- finally handling the popup blocker, which can potentially block the PDF.

Students in STAT 19000, 29000, and 39000 are to use this as a template for all project submissions. The template includes a code chunk that "activates" our Python environment, and adjusts some default settings. In addition, it provides examples on how to include solutions for Python, R, Bash, and SQL. Every question should be clearly marked with a third-level header (using 3 #s) followed by Question 1, Question 2, etc. Sections for solutions should be added or removed, based on the number of questions in the given project. All code chunks are to be run and solutions displayed for the compiled PDF submission.

Any format or template related questions should be asked in Piazza.

Submissions

Unless otherwise specified, all projects will need 2-4 submitted files:

- A compiled PDF file (built using the template), with all code and output.

- The .Rmd file (based off of the template), used to Knit the final PDF.

- If it is a project containing R code, a .R file containing all of the R code with comments explaining what the code does. Note: This is not an .Rmd file.

- If it is a project containing Python code, a .py file containing all of the Python code.

See here to learn how to transfer files to and from Scholar.

STAT 19000

Topics

The following table roughly highlights the topics and projects for the semester. This is slightly adjusted throughout the semester as student performance and feedback is taken into consideration.

| Language | Project # | Name | Topics |

|---|---|---|---|

| Python | 1 | Intro to Python: part I | declaring variables, printing, running cells, exporting to different formats, etc. |

| Python | 2 | Intro to Python: part II | lists, tuples, if statements, opening files, pandas, matplotlib, etc. |

| Python | 3 | Intro to Python: part III | sets, dicts, pandas, matplotlib, lists, tuples, etc. |

| Python | 4 | Control flow in Python | if statements, for loops, dicts, lists, matplotlib, etc. |

| Python | 5 | Scientific computing/Data wrangling: part I | timing, I/O, indexing in pandas, pandas functions, matplotlib, etc. |

| Python | 6 | Functions: part I | writing functions, docstrings, pandas, etc. |

| Python | 7 | Functions: part II | writing functions, docstrings, pandas, etc. |

| Python | 8 | Scientific computing/Data wrangling: part II | building a recommendation system |

| Python | 9 | Scientific computing/Data wrangling: part III | building a recommendation system, continued... |

| Python | 10 | Packages | Learn more about Python packaging, importing, etc. |

| Python | 11 | Python Classes: part I | writing classes in Python to build a game, dunder methods, attributes, methods, etc. |

| Python | 12 | Python Classes: part II | writing classes in Python to build a game, dunder methods, attributes, methods, etc., continued... |

| Python | 13 | Data wrangling & matplotlib: part I | more pandas, more matplotlib, wrangling with increased difficulty, etc. |

| Python | 14 | Data wrangling & matplotlib: part II | more pandas, more matplotlib, wrangling with increased difficulty, etc. |

Project 1

Motivation: In this course we require the majority of project submissions to include a compiled PDF, a .Rmd file based off of our template, and a code file (a .R file if the project is in R, a .py file if the project is in Python). Although RStudio makes it easy to work with both Python and R, there are occasions where working out a Python problem in a Jupyter Notebook could be convenient. For that reason, we will introduce Jupyter Notebook in this project.

Context: This is the first in a series of projects that will introduce Python and its tooling to students.

Scope: jupyter notebooks, rstudio, python

Learning objectives:

- Use Jupyter Notebook to run Python code and create Markdown text.

- Use RStudio to run Python code and compile your final PDF.

- Gain exposure to Python control flow and reading external data.

Make sure to read about, and use the template found here, and the important information about projects submissions here.

Dataset

The following questions will use the dataset found in Scholar:

/class/datamine/data/open_food_facts/openfoodfacts.tsv

Questions

Solution

- .ipynb

- .py

- .html

- .md

- .rst

- .tex

2. Each "box" in a Jupyter Notebook is called a cell. There are two primary types of cells: code, and markdown. By default, a cell will be a code cell. Place the following Python code inside the first cell, and run the cell. What is the output?

from thedatamine import hello_datamine

hello_datamine()Hint: You can run the code in the currently selected cell by using the GUI (the buttons), as well as by pressing Ctrl+Return/Enter.

Item(s) to submit:

- Output from running the provided code.

Solution

"Hello student! Welcome to The Data Mine!"

3. Jupyter Notebooks allow you to easily pull up documentation, similar to ?function in R. To do so, use the help function, like this: help(my_function). What is the output from running the help function on hello_datamine? Can you modify the code from question (2) to print a customized message? Create a new markdown cell and explain what you did to the code from question (2) to make the message customized.

Important note: Some Jupyter-only methods to do this are:

- Click on the function of interest and type

Shift+TaborShift+Tab+Tab. - Run

function?, for example,print?.

Important note: You can also see the source code of a function in a Jupyter Notebook by typing function??, for example, print??.

Item(s) to submit:

-

Output from running the

helpfunction onhello_datamine. - Modified code from question (2) that prints a customized message.

Solution

help(hello_datamine)Help on function hello_datamine in module thedatamine.core:

hello_datamine(name: str = 'student') -> None Prints a hello message to a Data Mine student.

Args:

str (name, optional): The name of a student. Defaults to 'student'.hello_datamine("Kevin")4. At this point in time, you've now got the basics of running Python code in Jupyter Notebooks. There is really not a whole lot more to it. For this class, however, we will continue to create RMarkdown documents in addition to the compiled PDFs. You are welcome to use Jupyter Notebooks for personal projects or for testing things out, however, we will still require an RMarkdown file (.Rmd), PDF (generated from the RMarkdown file), and .py file (containing your python code). For example, please move your solutions from Questions 1, 2, 3 from Jupyter Notebooks over to RMarkdown (we discuss RMarkdown below). Let's learn how to run Python code chunks in RMarkdown.

Sign in to https://rstudio.scholar.rcac.purdue.edu (with BoilerKey). Projects in The Data Mine should all be submitted using our template found here or on Scholar (/class/datamine/apps/templates/project_template.Rmd).

Open the project template and save it into your home directory, in a new RMarkdown file named project01.Rmd. Prior to running any Python code, run datamine_py() in the R console, just like you did at the beginning of every project from the first semester.

Code chunks are parts of the RMarkdown file that contains code. You can identify what type of code a code chunk contains by looking at the engine in the curly braces "{" and "}". As you can see, it is possible to mix and match different languages just by changing the engine. Move the solutions for questions 1-3 to your project01.Rmd. Make sure to place all Python code in python code chunks. Run the python code chunks to ensure you get the same results as you got when running the Python code in a Jupyter Notebook.

Important note: Make sure to run datamine_py() in the R console prior to attempting to run any Python code.

Hint: The end result of the project01.Rmd should look similar to this.

Item(s) to submit:

-

project01.Rmdwith the solutions from questions 1-3 (including any Python code inpythoncode chunks).

Solution

Done.

5. It is not a Data Mine project without data! Here are some examples of reading in data line by line using the csv package. How many columns are in the following dataset: /class/datamine/data/open_food_facts/openfoodfacts.tsv? Print the first row, the number of columns, and then exit the loop after the first iteration using the break keyword.

Hint: You can get the number of elements in a list by using the len method. For example: len(my_list).

Hint: You can use the break keyword to exit a loop. As soon as break is executed, the loop is exited and the code immediately following the loop is run.

for my_row in my_csv_reader:

print(my_row)

break

print("Exited loop as soon as 'break' was run.")Hint: '\t' represents a tab in Python.

Important note: If you get a Dtype warning, feel free to just ignore it.

Relevant topics: for loops, break, print

Item(s) to submit:

- Python code used to solve this problem.

- The first row printed, and the number of columns printed.

Solution

import csv

with open('/class/datamine/data/open_food_facts/openfoodfacts.tsv') as my_file:

my_reader = csv.reader(my_file, delimiter='\t')

for row in my_reader:

print(row)

print(len(row))

break # prematurely leave the loop6 (optional). Unlike in R, where many of the tools you need are built-in (read.csv, data.frames, etc.), in Python, you will need to rely on packages like numpy and pandas to do the bulk of your data science work.

In R it would be really easy to find the mean of the 151st column, caffeine_100g:

myDF <- read.csv("/class/datamine/data/open_food_facts/openfoodfacts.tsv", sep="\t", quote="")

mean(myDF$caffeine_100g, na.rm=T) # 2.075503If you were to try to modify our loop from question (5) to do the same thing, you will run into a myriad of issues, just to try and get the mean of a column. Luckily, it is easy to do using pandas:

import pandas as pd

myDF = pd.read_csv("/class/datamine/data/open_food_facts/openfoodfacts.tsv", sep="\t")

myDF["caffeine_100g"].mean() # 2.0755028571428573Take a look at some of the methods you can perform using pandas here. Perform an interesting calculation in R, and replicate your work using pandas. Which did you prefer, Python or R?

Item(s) to submit:

- R code used to solve the problem.

- Python code used to solve the problem.

Solution

# could be anything.Project 2

Motivation: In Python it is very important to understand some of the data types in a little bit more depth than you would in R. Many of the data types in Python will seem very familiar. A character in R is similar to a str in Python. An integer in R is an int in Python. A numeric in R is similar to a float in Python. A logical in R is similar to a bool in Python. In addition to all of that, there are some very popular classes that packages like numpy and pandas introduces. On the other hand, there are some data types in Python like tuples, lists, sets, and dicts that diverge from R a little bit more. It is integral to understand some basic concepts before jumping too far into everything.

Context: This is the second project introducing some basic data types, and demonstrating some familiar control flow concepts, all while digging right into a dataset.

Scope: tuples, lists, if statements, opening files

Learning objectives:

- List the differences between lists & tuples and when to use each.

- Gain familiarity with string methods, list methods, and tuple methods.

- Demonstrate the ability to read and write data of various formats using various packages.

Make sure to read about, and use the template found here, and the important information about projects submissions here.

Dataset

The following questions will use the dataset found in Scholar:

/class/datamine/data/craigslist/vehicles.csv

Questions

1. Read in the dataset /class/datamine/data/craigslist/vehicles.csv into a pandas DataFrame called myDF. pandas is an integral tool for various data science tasks in Python. You can read a quick intro here. We will be slowly introducing bits and pieces of this package throughout the semester. Similarly, we will try to introduce byte-sized (ha!) portions of plotting packages to slowly build up your skills.

How big is the dataset (in Mb or Gb)?

Important note: If you didn't do optional question 6 in project 1, we would recommend taking a look.

Hint: Remember to check out a question's relevant topics. We try very hard to link you to content and examples that will get you up and running as quickly as possible.

Relevant topics: pandas read_csv, get filesize in Python

Item(s) to submit:

- Python code used to solve the problem.

Solution

import pandas as pd

from pathlib import Path

myDF = pd.read_csv("/class/datamine/data/craigslist/vehicles.csv")

p = Path("/class/datamine/data/craigslist/vehicles.csv")

size_in_mb = p.stat().st_size/1000

print(size_in_mb)2. In question (1) we read in our data into a pandas DataFrame. Use one of the pandas DataFrame attributes to get the number of columns and rows of our dataset. How many columns and rows are there? Use f-strings to print a message, for example:

There are 123 columns in the DataFrame!

There are 321 rows in the DataFrame!In project 1, we learned how to read a csv file in, line-by-line, and print values. Use the csv package to print just the first row, which should contain the names of the columns, OR instead of using the csv package, use one of the pandas attributes from myDF (to print the column names).

Relevant topics: csv read csv, pandas DataFrame, f-strings, break

Item(s) to submit:

- The output from printing the f-strings.

- Python code used to solve the problem.

Solution

print(f'There are {myDF.shape[1]} columns in the DataFrame!')

print(f'There are {myDF.shape[0]} columns in the DataFrame!')import csv

our_row = []

with open("/class/datamine/data/craigslist/vehicles.csv") as my_file:

my_reader = csv.reader(my_file)

for row in my_reader:

our_row = row

break

print(our_row)

print(myDF.columns.to_list())3. Use the csv or pandas package to get a list called our_columns that contains the column names. Add a string, "extra", to the end of our_columns. Print the second value in the list. Without using a loop, print the 1st, 3rd, 5th, etc. elements of the list. Print the last four elements of the list ( "state", "lat", "long", and "extra") by accessing their negative index.

"extra" doesn't belong in our list, you can easily remove this value from our list by doing the following:

our_columns.pop(25)

# or even this, as pop removes the last value by default

our_columns.pop()BUT the problem with this solution is that you must know the index of the value you'd like to remove, and sometimes you do not know the index of the value. Instead, please show how to use a list method to remove "extra" by value rather than by index.

Relevant topics: csv read csv, break, append, indexing

Item(s) to submit:

- Python code used to solve the problem.

- The output from running your code.

Solution

our_columns = []

with open("/class/datamine/data/craigslist/vehicles.csv") as my_file:

my_reader = csv.reader(my_file)

for row in my_reader:

our_columns = row

break

our_columns.append("extra")

print(our_columns[1])

print(our_columns[::2])

print(our_columns[-4:])

print(our_columns.remove("extra"))4. matplotlib is one of the primary plotting packages in Python. You are provided with the following code:

my_values = tuple(myDF.loc[:, 'odometer'].dropna().to_list())The result is a tuple containing the odometer readings from all of the vehicles in our dataset. Create a lineplot of the odometer readings.

Well, that plot doesn't seem too informative. Let's first sort the values in our tuple:

my_values.sort()What happened? A tuple is immutable. What this means is that once the contents of a tuple are declared they cannot be modified. For example:

# This will fail because tuples are immutable

my_values[0] = 100You can read a good article about this here. In addition, here is a great post that gives you an idea when using a tuple might be a good idea. Okay, so let's go back to our problem. We know that lists are mutable (and therefore sortable), so convert my_values to a list and then sort, and re-plot.

It looks like there are some (potential) outliers that are making our plot look a little wonky. For the sake of seeing how the plot would look, use negative indexing to plot the sorted values minus the last 50 values (the 50 highest values). New new plot may not look that different, that is okay.

Hint: To prevent plotting values on the same plot, close your plot with the close method, for example:

import matplotlib.pyplot as plt

my_values = [1,2,3,4,5]

plt.plot(my_values)

plt.show()

plt.close()Relevant topics: list methods, indexing, matplotlib lineplot

Item(s) to submit:

- Python code used to solve the problem.

- The output from running your code.

Solution

import matplotlib.pyplot as plt

my_values = tuple(myDF.loc[:, 'odometer'].dropna().to_list())

plt.plot(my_values)

plt.close()

my_values = list(my_values)

my_values.sort()

plt.plot(my_values)

plt.show()

plt.close()

plt.plot(my_values[:-50])

plt.show()

plt.close()5. We've covered a lot in this project! Use what you've learned so far to do one (or more) of the following tasks:

- Create a cool graphic using matplotlib, that summarizes some data from our dataset.

- Use pandas and your investigative skills to sift through the dataset and glean an interesting factoid.

- Create some commented coding examples that highlight the differences between lists and tuples. Include at least 3 examples.

Relevant topics: pandas, indexing, matplotlib

Item(s) to submit:

- Python code used to solve the problem.

- The output from running your code.

Solution

# Could be anything.Project 3

Motivation: A dictionary (referred to as a dict) is one of the most useful data structures in Python. You can think about them as a data structure containing key: value pairs. Under the hood, a dict is essentially a data structure called a hash table. Hash tables are a data structure with a useful set of properties. The time needed for searching, inserting, or removing a piece of data has a constant average lookup time, meaning that no matter how big your hash table grows to be, inserting, searching, or deleting a piece of data will usually take about the same amount of time. (The worst case time increases linearly.) Dictionaries (dict) are used a lot, so it is worthwhile to understand them. Although not used quite as often, another important data type called a set, is also worthwhile learning about.

Dictionaries, often referred to as dicts, are really powerful. There are two primary ways to "get" information from a dict. One is to use the get method, the other is to use square brackets and strings. Test out the following to understand the differences between the two:

my_dict = {"fruits": ["apple", "orange", "pear"], "person": "John", "vegetables": ["carrots", "peas"]}

# If "person" is indeed a key, they will function the same way

my_dict["person"]

my_dict.get("person")

# If the key does not exist, like below, they will not

# function the same way.

my_dict.get("height") # Returns None when key doesn't exist

print(my_dict.get("height")) # By printing, we can see None in this case

my_dict["height"] # Throws a KeyError exception because the key, "height" doesn't existContext: In our third project, we introduce some basic data types, and we demonstrate some familiar control flow concepts, all while digging right into a dataset. Throughout the course, we will slowly introduce concepts from pandas, and popular plotting packages.

Scope: dicts, sets, if/else statements, opening files, tuples, lists

Learning objectives:

-

Explain what is a

dictis and why it is useful. -

Understand how a

setworks and when it could be useful. - List the differences between lists & tuples and when to use each.

- Gain familiarity with string methods, list methods, and tuple methods.

- Gain familiarity with dict methods.

Make sure to read about, and use the template found here, and the important information about projects submissions here.

Dataset

The following questions will use the dataset found in Scholar:

/class/datamine/data/craigslist/vehicles.csv

Questions

1. In project 2 we learned how to read in data using pandas. Read in the (/class/datamine/data/craigslist/vehicles.csv) dataset into a DataFrame called myDF using pandas. In R we can get a sneak peek at the data by doing something like:

head(myDF) # where myDF is a data.frameThere is a very similar (and aptly named method) in pandas that allows us to do the exact same thing with a pandas DataFrame. Get the head of myDF, and take a moment to consider how much time it would take to get this information if we didn't have this nice head method.

Relevant topics: pandas read_csv, head

Item(s) to submit:

- Python code used to solve the problem.

-

The

headof the dataset.

Solution

import pandas as pd

myDF = pd.read_csv("/class/datamine/data/craigslist/vehicles.csv")

myDF.head()2. Dictionaries, often referred to as dicts, are really powerful. There are two primary ways to "get" information from a dict. One is to use the get method, the other is to use square brackets and strings. Test out the following to understand the differences between the two:

my_dict = {"fruits": ["apple", "orange", "pear"], "person": "John", "vegetables": ["carrots", "peas"]}

# If "person" is indeed a key, they will function the same way

my_dict["person"]

my_dict.get("person")

# If the key does not exist, like below, they will not

# function the same way.

my_dict.get("height") # Returns None when key doesn't exist

print(my_dict.get("height")) # By printing, we can see None in this case

my_dict["height"] # Throws a KeyError exception because the key, "height" doesn't existLook at the dataset. Create a dict called my_dict that contains key:value pairs where the keys are years, and the values are a single int representing the number of vehicles from that year on craigslist. Use the year column, a loop, and a dict to accomplish this. Print the dictionary. You can use the following code to extract the year column as a list. In the next project we will learn how to loop over pandas DataFrames.

Hint: If you get a KeyError, remember, you must declare each key value pair just like any other variable. Use the following code to initialize each year key to the value 0.

myyears = myDF['year'].dropna().to_list()

# get a list containing each unique year

unique_years = list(set(myyears))

# for each year (key), initialize the value (value) to 0

my_dict = {}

for year in unique_years:

my_dict[year] = 0Hint: Here are some of the results you should get:

print(my_dict[1912]) # 5

print(my_dict[1982]) # 185

print(my_dict[2014]) # 31703Note: There is a special kind of dict called a defaultdict, that allows you to give default values to a dict, giving you the ability to "skip" initialization. We will show you this when we release the solutions to this project! It is not required, but it is interesting to know about!

Relevant topics: dicts

Item(s) to submit:

- Python code used to solve the problem.

-

my_dictprinted.

Solution

years = myDF['year'].dropna().to_list()

unique_years = list(set(years))

my_dict = {}

for year in unique_years:

my_dict[year] = 0

for year in years:

my_dict[year] += 1

print(my_dict)OR

from collections import defaultdict

years = myDF['year'].dropna().to_list()

my_dict = defaultdict(int)

for year in years:

my_dict[year] += 1

print(my_dict)3. After completing question (2) you can easily access the number of vehicles from a given year. For example, to get the number of vehicles on craigslist from 1912, just run:

my_dict[1912]

# or

my_dict.get(1912)A dict stores its data in key:value pairs. Identify a "key" from my_dict, as well as the associated "value". As you can imagine, having data in this format can be very beneficial. One benefit is the ability to easily create a graphic using matplotlib. Use matplotlib to create a bar graph with the year on the x-axis, and the number of vehicles from that year on the y-axis.

Important note: If when you end up seeing something like <BarContainer object of X artists>, you should probably end the code chunk with plt.show() instead. What is happening is Python is trying to print the plot object. That text is the result. To instead display the plot you need to call plt.show().

Hint: To use matplotlib, first import it:

import matplotlib.pyplot as plt

# now you can use it, for example

plt.plot([1,2,3,1])

plt.show()

plt.close()Hint: The keys method and values method from dict could be useful here.

Relevant topics: dicts, matplotlib, barplot

Item(s) to submit:

- Python code used to solve the problem.

- The resulting plot.

-

A sentence giving an example of a "key" and associated "value" from

my_dict(e.g., a sentence explaining the 1912 example above).

Solution

In my_dict a key is 1912, and the associated value is 5.

import matplotlib.pyplot as plt

plt.bar(my_dict.keys(), my_dict.values())4. In the hint in question (2), we used a set to quickly get a list of unique years in a list. Some other common uses of a set are when you want to get a list of values that are in one list but not another, or get a list of values that are present in both lists. Examine the following code. You'll notice that we are looping over many values. Replace the code for each of the three examples below with code that uses no loops whatsoever.

listA = [1, 2, 3, 4, 5, 6, 12, 12]

listB = [2, 1, 7, 7, 7, 2, 8, 9, 10, 11, 12, 13]

# 1. values in list A but not list B

# values in list A but not list B

onlyA = []

for valA in listA:

if valA not in listB and valA not in onlyA:

onlyA.append(valA)

print(onlyA) # [3, 4, 5, 6]

# 2. values in listB but not list A

onlyB = []

for valB in listB:

if valB not in listA and valB not in onlyB:

onlyB.append(valB)

print(onlyB) # [7, 8, 9, 10, 11, 13]

# 3. values in both lists

# values in both lists

in_both_lists = []

for valA in listA:

if valA in listB and valA not in in_both_lists:

in_both_lists.append(valA)

print(in_both_lists) # [1,2,12]Hint: You should use a set.

Note: In addition to being easier to read, using a set is much faster than loops!

Note: A set is a group of values that are unordered, unchangeable, and no duplicate values are allowed. While they aren't used a lot, they can be useful for a few common tasks like: removing duplicate values efficiently, efficiently finding values in one group of values that are not in another group of values, etc.

Relevant topics: sets

Item(s) to submit:

- Python code used to solve the problem.

- The output from running the code.

Solution

listA = [1, 2, 3, 4, 5, 6, 12, 12]

listB = [2, 1, 7, 7, 7, 2, 8, 9, 10, 11, 12, 13]

onlyA = list(set(listA) - set(listB))

print(onlyA)

onlyB = list(set(listB) - set(listA))

print(onlyB)

in_both_lists = list(set.intersection(set(listA), set(listB)))

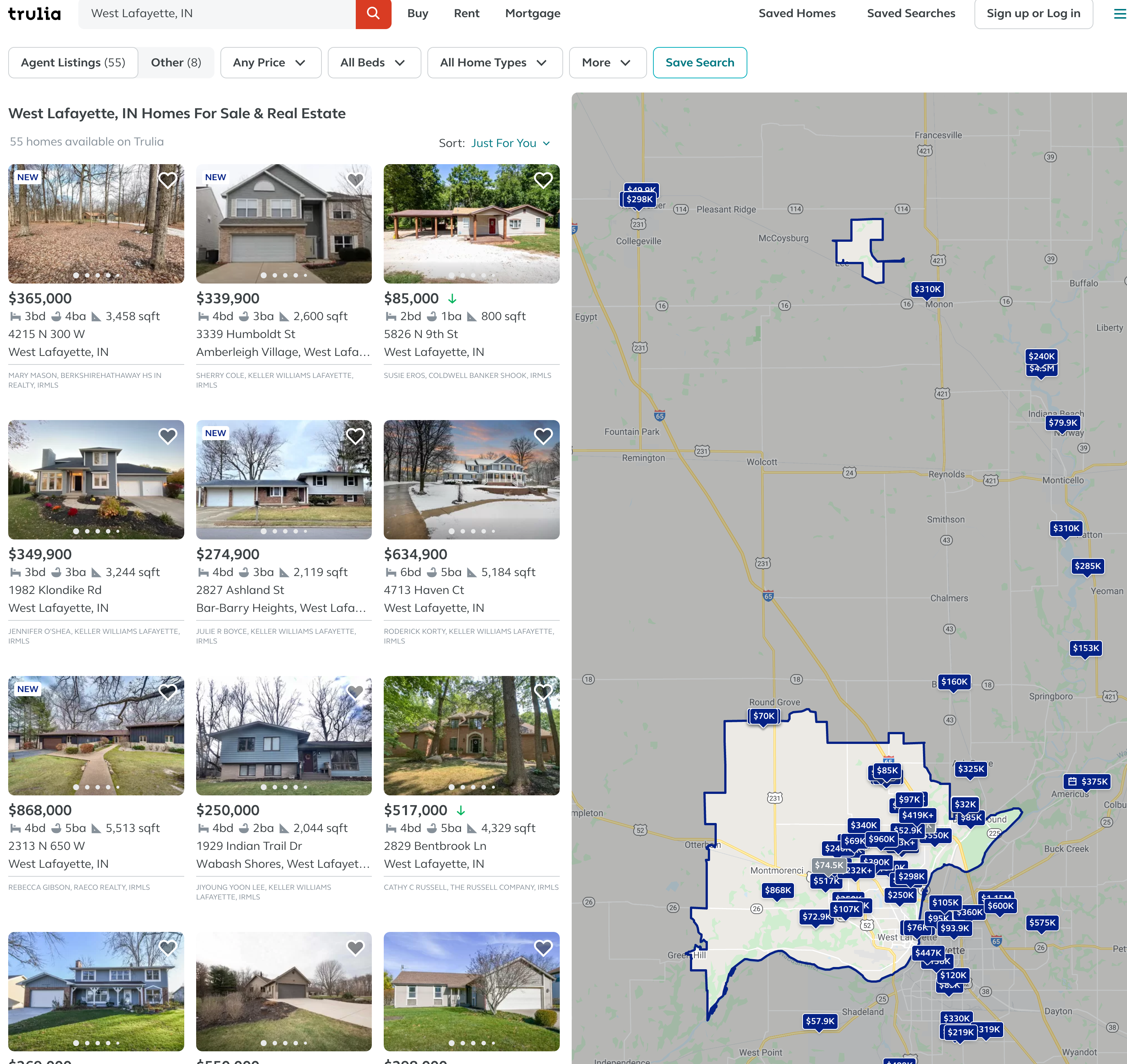

print(in_both_lists)5. The value of a dictionary does not have to be a single value (like we've shown so far). It can be anything. Observe that there is latitude and longitude data for each row in our DataFrame (lat and long, respectively). Wouldn't it be useful to be able to quickly "get" pairs of latitude and longitude data for a given state?

First, run the following code to get a list of tuples where the first value is the state, the second value is the lat, and the third value is the long.

states_list = list(myDF.loc[:, ["state", "lat", "long"]].dropna().to_records(index=False))

states_list[0:3] # [('az', 34.4554, -114.269), ('or', 46.1837, -123.824), ('sc', 34.9352, -81.9654)]

# to get the first tuple

states_list[0] # ('az', 34.4554, -114.269)

# to get the first value in the first tuple

states_list[0][0] # az

# to get the second tuple

states_list[1] # ('or', 46.1837, -123.824)

# to get the first value in the second tuple

states_list[1][0] # orHint: If you have an issue where you cannot append values to a specific key, make sure to first initialize the specific key to an empty list so the append method is available to use.

Now, organize the latitude and longitude data in a dictionary called geoDict such that each state from the state column is a key, and the respective value is a list of tuples, where the first value in each tuple is the latitude (lat) and the second value is the longitude (long). For example, the first 2 (lat,long) pairs in Indiana ("in") are:

geoDict.get("in")[0:2] # [(39.0295, -86.8675), (38.8585, -86.4806)]

len(geoDict.get("in")) # 5687Now that you can easily access latitude and longitude pairs for a given state, run the following code to plot the points for Texas (the state value is "tx"). Include the the graphic produced below in your solution, but feel free to experiment with other states.

NOTE: You do NOT need to include this portion of Question 5 in your Markdown .Rmd file. We cannot get this portion to build in Markdown, but please do include it in your Python .py file.

from shapely.geometry import Point

import geopandas as gpd

from geopandas import GeoDataFrame

usa = gpd.read_file('/class/datamine/data/craigslist/cb_2018_us_state_20m.shp')

usa.crs = {'init': 'epsg:4269'}

pts = [Point(y,x) for x, y in geoDict.get("tx")]

gdf = gpd.GeoDataFrame(geometry=pts, crs = 4269)

fig, gax = plt.subplots(1, figsize=(10,10))

base = usa[usa['NAME'].isin(['Hawaii', 'Alaska', 'Puerto Rico']) == False].plot(ax=gax, color='white', edgecolor='black')

gdf.plot(ax=base, color='darkred', marker="*", markersize=10)

plt.show()

plt.close()

# to save to jpg:

plt.savefig('q5.jpg')Relevant topics: dicts, lists and tuples

Item(s) to submit:

- Python code used to solve the problem.

-

Graphic file (

q5.jpg) produced for the given state.

Solution

geoDict = dict()

for val in states_list:

geoDict[val[0]] = []

for val in states_list:

geoDict[val[0]].append((val[1],val[2]))

geoDict.get("in")[0:2]

len(geoDict.get("in"))from shapely.geometry import Point

import geopandas as gpd

from geopandas import GeoDataFrame

usa = gpd.read_file('/class/datamine/data/craigslist/cb_2018_us_state_20m.shp')

usa.crs = {'init': 'epsg:4269'}

pts = [Point(y,x) for x, y in geoDict.get("tx")]

gdf = gpd.GeoDataFrame(geometry=pts, crs = 4269)

fig, gax = plt.subplots(1, figsize=(10,10))

base = usa[usa['NAME'].isin(['Hawaii', 'Alaska', 'Puerto Rico']) == False].plot(ax=gax, color='white', edgecolor='black')

gdf.plot(ax=base, color='darkred', marker="*", markersize=10)

plt.show()

# to save to jpg:

plt.savefig('q5.jpg')6. Use your new skills to extract some sort of information from our dataset and create a graphic. This can be as simple or complicated as you are comfortable with!

Relevant topics: dicts, lists and tuples

Item(s) to submit:

- Python code used to solve the problem.

- The graphic produced using the code.

Solution

Could be anything.

Project 4

Motivation: We've now been introduced to a variety of core Python data structures. Along the way we've touched on a bit of pandas, matplotlib, and have utilized some control flow features like for loops and if statements. We will continue to touch on pandas and matplotlib, but we will take a deeper dive in this project and learn more about control flow, all while digging into the data!

Context: We just finished a project where we were able to see the power of dictionaries and sets. In this project we will take a step back and make sure we are able to really grasp control flow (if/else statements, loops, etc.) in Python.

Scope: python, dicts, lists, if/else statements, for loops

Learning objectives:

- List the differences between lists & tuples and when to use each.

- Explain what is a dict and why it is useful.

- Demonstrate a working knowledge of control flow in python: if/else statements, while loops, for loops, etc.

Make sure to read about, and use the template found here, and the important information about projects submissions here.

Dataset

The following questions will use the dataset found in Scholar:

/class/datamine/data/craigslist/vehicles.csv

Questions

1. Unlike in R, where traditional loops are rare and typically accomplished via one of the apply functions, in Python, loops are extremely common and important to understand. In Python, any iterator can be looped over. Some common iterators are: tuples, lists, dicts, sets, pandas Series, and pandas DataFrames. In the previous project we had some examples of looping over lists, let's learn how to loop over pandas Series and Dataframes!

Load up our dataset /class/datamine/data/craigslist/vehicles.csv into a DataFrame called myDF. In project (3), we organized the latitude and longitude data in a dictionary called geoDict such that each state from the state column is a key, and the respective value is a list of tuples, where the first value in each tuple is the latitude (lat) and the second value is the longitude (long). Repeat this question, but do not use lists, instead use pandas to accomplish this.

Hint: The data frame has 435,849 rows, and it takes forever to accomplish this with pandas. We just want you to do this one time, to see how slow this is. Try it first with only 10 rows, and then with 100 rows, and once you are sure it is working, try it with (say) 20,000 rows. You do not need to do this with the entire data frame. It takes too long!

Relevant topics: DataFrame.iterrows(),

Item(s) to submit:

- Python code used to solve the problem.

- Output from running your code.

Solution

import pandas as pd

myDF = pd.read_csv("/class/datamine/data/craigslist/vehicles.csv")

geoDict = dict()

for my_index, my_row in myDF.iterrows():

geoDict[my_row["state"]] = []

for my_index, my_row in myDF.iterrows():

geoDict[my_row["state"]].append((my_row["lat"], my_row["long"]))

geoDict.get("in")[0:2]

len(geoDict.get("in"))2. Wow! The solution to question (1) was slow. In general, you'll want to avoid looping over large DataFrames. Here is a pretty good explanation of why, as well as a good system on what to try when computing something. In this case, we could have used indexing to get latitude and longitude values for each state, and would have no need to build this dict.

The method we learned in Project 3, Question 5 is faster and easier! Just in case you did not solve Project 3, Question 5, here is a fast way to build geoDict:

import pandas as pd

myDF = pd.read_csv("/class/datamine/data/craigslist/vehicles.csv")

states_list = list(myDF.loc[:, ["state", "lat", "long"]].dropna().to_records(index=False))

geoDict = {}

for mytriple in states_list:

geoDict[mytriple[0]] = []

for mytriple in states_list:

geoDict[mytriple[0]].append( (mytriple[1],mytriple[2]) )Now we will practice iterating over a dictionary, list, and tuple, all at once! Loop through geoDict and use f-strings to print the state abbreviation. Print the first latitude and longitude pair, as well as every 5000th latitude and longitude pair for each state. Round values to the hundreths place. For example, if the state was "pu", and it had 12000 latitude and longitude pairs, we would print the following:

pu:

Lat: 41.41, Long: 41.41

Lat: 22.21, Long: 21.21

Lat: 11.11, Long: 10.22In the above example, Lat: 41.41, Long: 41.41 would be the 0th pair, Lat: 22.21, Long: 21.21 would be the 5000th pair, and Lat: 11.11, Long: 10.22 would be the 10000th pair. Make sure to use f-strings to round the latitude and longitude values to two decimal places.

There are several ways to solve this question. You can use whatever method is easiest for you, but please be sure (as always) to add comments to explain your method of solution.

Hint: Enumerate is a useful function that adds an index to our loop.

Hint: Using an if statement and the modulo operator could be useful.

Note: Whenever we have a loop within another loop, the "inner" loop is called a "nested" loop, as it is "nested" inside of the other.

Relevant topics: dicts, modulus operator, f-strings, if/else, for loops

Item(s) to submit:

- Python code used to solve the problem.

- Output from running your code.

Solution

for key, value in geoDict.items():

print(f'{key}:')

for idx, triplet in enumerate(value):

if (idx % 5000 == 0):

print(f'Lat: {triplet[0]:.2f}, Long: {triplet[1]:.2f}')3. We are curious about how the year of the car (year) effects the price (price). In R, we could get the median price by year easily, using tapply:

tapply(myDF$price, myDF$year, median, na.rm=T)Using pandas, we would do this:

res = myDF.groupby(['year'], dropna=True).median()These are very convenient functions that do a lot of work for you. If we were to take a look at the median price of cars by year, it would look like:

import matplotlib.pyplot as plt

res = myDF.groupby(['year'], dropna=True).median()["price"]

plt.bar(res.index, res.values)Using the content of the variable my_list provided in the code below, calculate the median car price per year without using the median function and without using a sort function. Use only dictionaries, for loops and if statements. Replicate the plot generated by running the code above (you can use the plot to make sure it looks right).

my_list = list(myDF.loc[:, ["year", "price",]].dropna().to_records(index=False))Hint: If you do not want to write your own median function to find the median, then it is OK to just use the getMid function found here or to use a median function from elsewhere on the web. Just be sure to cite your source, if you do use a median function that someone else provides or that you use from the internet. There are many small variations on median functions, especially when it comes to (for instance) lists with even length.

Hint: It is also OK to use: import statistics and the function statistics.median

Relevant topics: dicts, for loops, if/else

Item(s) to submit:

- Python code used to solve the problem.

- Output from running your code.

- The barplot.

Solution

from collections import defaultdict

import matplotlib.pyplot as plt

my_list = list(myDF.loc[:, ["year", "price",]].dropna().to_records(index=False))

my_dict = defaultdict(list)

for (year, price) in my_list:

my_dict[year].append(float(price))

my_years, my_prices = [],[]

for year, prices in sorted(my_dict.items()):

if len(prices) % 2 == 0:

lower_mid = int(len(prices)/2 - 1)

upper_mid = int(len(prices)/2)

prices_sorted = sorted(prices)

median_price = (prices_sorted[lower_mid] + prices_sorted[upper_mid]) / 2

else:

median_price = sorted(prices)[int(len(prices)/2)]

print(f'{year}: {median_price}')

my_years.append(year)

my_prices.append(median_price)

plt.bar(my_years, my_prices)

plt.show()4. Now calculate the mean price by year(still not using pandas code), and create a barplot with the price on the y-axis and year on the x-axis. Whoa! Something is odd here. Explain what is happening. Modify your code to use an if statement to "weed out" the likely erroneous value. Re-plot your values.

Click here for video (same as in Question 3)

Hint: It is also OK to use a built-in mean function, for instace: import statistics and the function statistics.mean

Relevant topics: sorted, for loops, if/else, list methods

Item(s) to submit:

- Python code used to solve the problem.

- Output from running your code.

- The barplot.

Solution

from collections import defaultdict

my_dict = defaultdict(list)

for (year, price) in my_list:

my_dict[year].append(price)

my_years, my_prices = [],[]

for year, prices in sorted(my_dict.items()):

print(f'{year}: {sum(prices)/len(prices)}')

my_years.append(year)

my_prices.append(sum(prices)/len(prices))

plt.bar(my_years, my_prices)

plt.show()from collections import defaultdict

my_dict = defaultdict(list)

for (year, price) in my_list:

if price > 1_000_000:

continue

my_dict[year].append(price)

my_years, my_prices = [],[]

for year, prices in sorted(my_dict.items()):

avg_price = sum(prices)/len(prices)

print(f'{year}: {avg_price}')

my_years.append(year)

my_prices.append(avg_price)

plt.bar(my_years, my_prices)

plt.show()5. List comprehensions are a neat feature of Python that allows for a more concise syntax for smaller loops. While at first they may seem difficult and more confusing, eventually they grow on you. For example, say you wanted to capitalize every state in a list full of states:

my_states = myDF['state'].to_list()

my_states = [state.upper() for state in my_states]Or, maybe you wanted to find the average price of cars in "excellent" condition (without pandas):

my_list = list(myDF.loc[:, ["condition", "price",]].dropna().to_records(index=False))

my_list = [price for (condition, price) in my_list if condition == "excellent"]

sum(my_list)/len(my_list)Do the following using list comprehensions, and the provided code:

my_list = list(myDF.loc[:, ["state", "price",]].dropna().to_records(index=False))- Calculate the average price of vehicles from Indiana (

in). - Calculate the average price of vehicles from Indiana (

in), Michigan (mi), and Illinois (il) combined.

my_list = list(myDF.loc[:, ["manufacturer", "year", "price",]].dropna().to_records(index=False))- Calculate the average price of a "honda" (

manufacturer) that is 2010 or newer (year).

Relevant topics: sorted, for loops, if/else, list methods, sum, len, defaultdict, matplotlib

Item(s) to submit:

- Python code used to solve the problem.

- Output from running your code.

Solution

my_list = list(myDF.loc[:, ["state", "price",]].dropna().to_records(index=False))

my_list = [price for (state,price) in my_list if state == "in"]

print(sum(my_list)/len(my_list))

my_list = list(myDF.loc[:, ["state", "price",]].dropna().to_records(index=False))

my_list = [price for (state,price) in my_list if state in ('in', 'il', 'mi')]

print(sum(my_list)/len(my_list))

my_list = list(myDF.loc[:, ["manufacturer", "year", "price",]].dropna().to_records(index=False))

my_list = [p for (m, y, p) in my_list if m=='honda' and y >= 2010]

print(sum(my_list)/len(my_list))6. Let's use a package called spacy to try and parse phone numbers out of the description column. First, simply loop through and print the text and the label. What is the label of the majority of the phone numbers you can see?

import spacy

# get list of descriptions

my_list = list(myDF.loc[:, ["description",]].dropna().to_records(index=False))

my_list = [m[0] for m in my_list]

# load the pre-built spacy model

nlp = spacy.load("en_core_web_lg")

# apply the model to a description

doc = nlp(my_list[0])

# print the text and label of each "entity"

for entity in doc.ents:

print(entity.text, entity.label_)Use an if statement to filter out all entities that are not the label you see. Loop through again and see what our printed data looks like. There is still a lot of data there that we don't want to capture, right? Phone numbers in the US are usually 7 (5555555), 8 (555-5555), 10 (5555555555), 11 (15555555555), 12 (555-555-5555), or 14 (1-555-555-5555) digits. In addition to your first "filter", add another "filter" that keeps only text where the text is one of those lengths.

That is starting to look better, but there are still some erroneous values. Come up with another "filter", and loop through our data again. Explain what your filter does and make sure that it does a better job on the first 10 documents than when we don't use your filter.

Note: If you get an error when trying to knit that talks about "unicode" characters, this is caused by trying to print special characters (non-ascii). An easy fix is just to remove all non-ascii text. You can do this with the encode string method. For example:

Instead of:

for entity in doc.ents:

print(entity.text, entity.label_)Do:

for entity in doc.ents:

print(entity.text.encode('ascii', errors='ignore'), entity.label_)Note: It can be fun to utilize machine learning and natural language processing, but that doesn't mean it is always the best solution! We could get rid of all of our filters and use regular expressions with much better results! We will demonstrate this in our solution.

Relevant topics: for loops

Item(s) to submit:

- Python code used to solve the problem.

- Output from running your code.

- 1-2 sentences explaining what your filter does.

Solution

import spacy

my_list = list(myDF.loc[:, ["description",]].dropna().to_records(index=False))

my_list = [m[0] for m in my_list]

# load the pre-built spacy model

nlp = spacy.load("en_core_web_lg")

for doc in my_list[:10]:

d = nlp(doc)

for entity in d.ents:

print(entity.text.encode('ascii', errors='ignore'), entity.label_)

for doc in my_list[:10]:

d = nlp(doc)

for entity in d.ents:

if entity.label_ == "CARDINAL":

print(entity.text.encode('ascii', errors='ignore'), entity.label_)

for doc in my_list[:10]:

d = nlp(doc)

for entity in d.ents:

if entity.label_ == "CARDINAL" and len(entity.text) in [7, 8, 10, 11, 12, 14]:

print(entity.text.encode('ascii', errors='ignore'), entity.label_)

for doc in my_list[:10]:

d = nlp(doc)

for entity in d.ents:

if entity.label_ == "CARDINAL" and len(entity.text) in [7, 8, 10, 11, 12, 14]:

print(entity.text.encode('ascii', errors='ignore'), entity.label_)

import re

pattern = '\(?([0-9]{3})?\)?[-.]?([0-9]{3})[-.]?([0-9]{4})'

for doc in my_list[:10]:

if matches := re.finditer(pattern, doc):

for match in matches:

print(match.group())Project 5

Motivation: Up until this point we've utilized bits and pieces of the pandas library to perform various tasks. In this project we will formally introduce pandas and numpy, and utilize their capabilities to solve data-driven problems.

Context: By now you'll have had some limited exposure to pandas. This is the first in a three project series that covers some of the main components of both the numpy and pandas libraries. We will take a two project intermission to learn about functions, and then continue.

Scope: python, pandas, numpy, DataFrames, Series, ndarrays, indexing

Learning objectives:

- Distinguish the differences between numpy, pandas, DataFrames, Series, and ndarrays.

- Use numpy, scipy, and pandas to solve a variety of data-driven problems.

- Demonstrate the ability to read and write data of various formats using various packages.

- View and access data inside DataFrames, Series, and ndarrays.

Make sure to read about, and use the template found here, and the important information about projects submissions here.

Dataset

The following questions will use the dataset found in Scholar:

/class/datamine/data/stackoverflow/unprocessed/2018.csv

/class/datamine/data/stackoverflow/unprocessed/2018.parquet

/class/datamine/data/stackoverflow/unprocessed/2018.feather

Questions

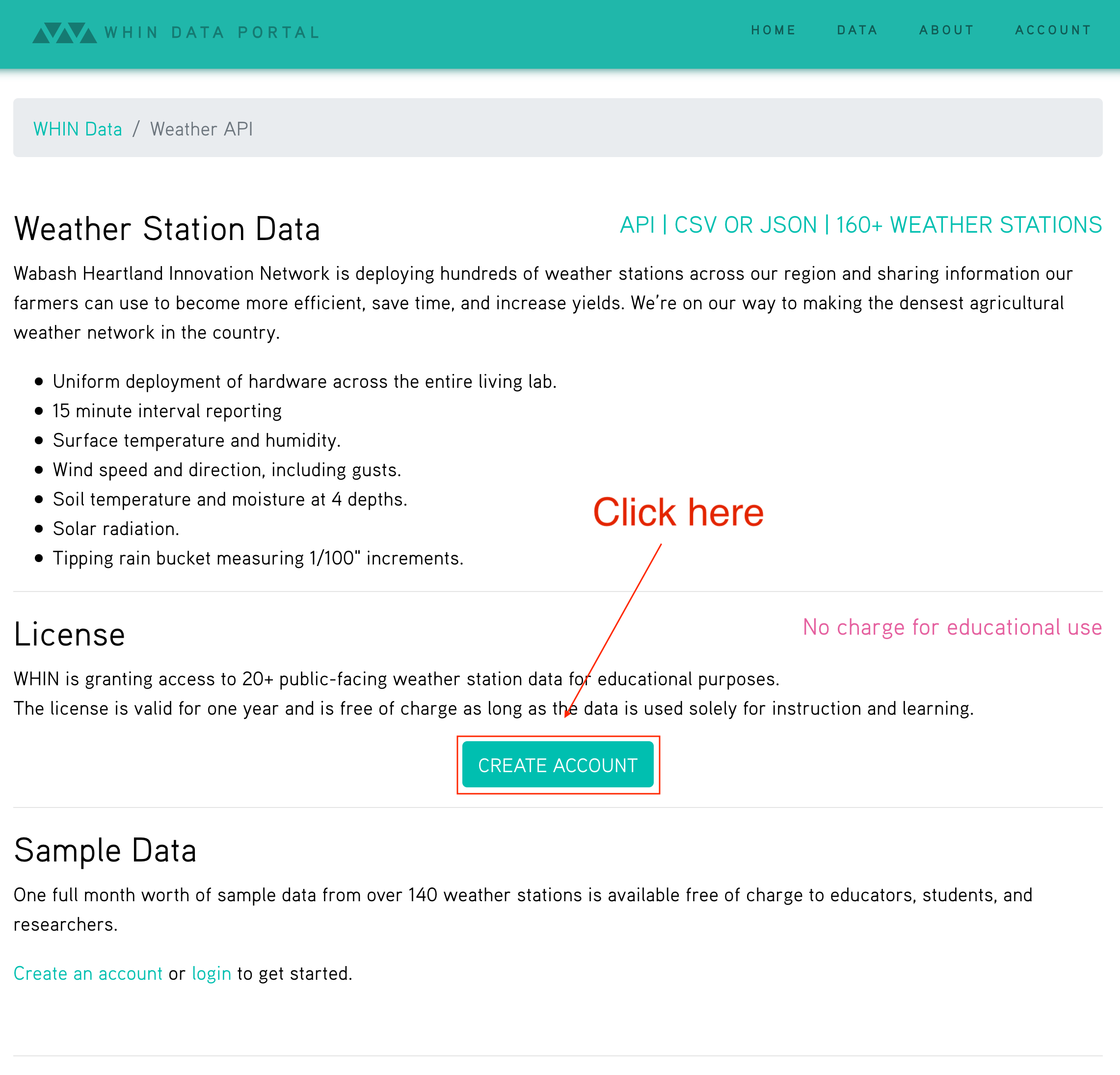

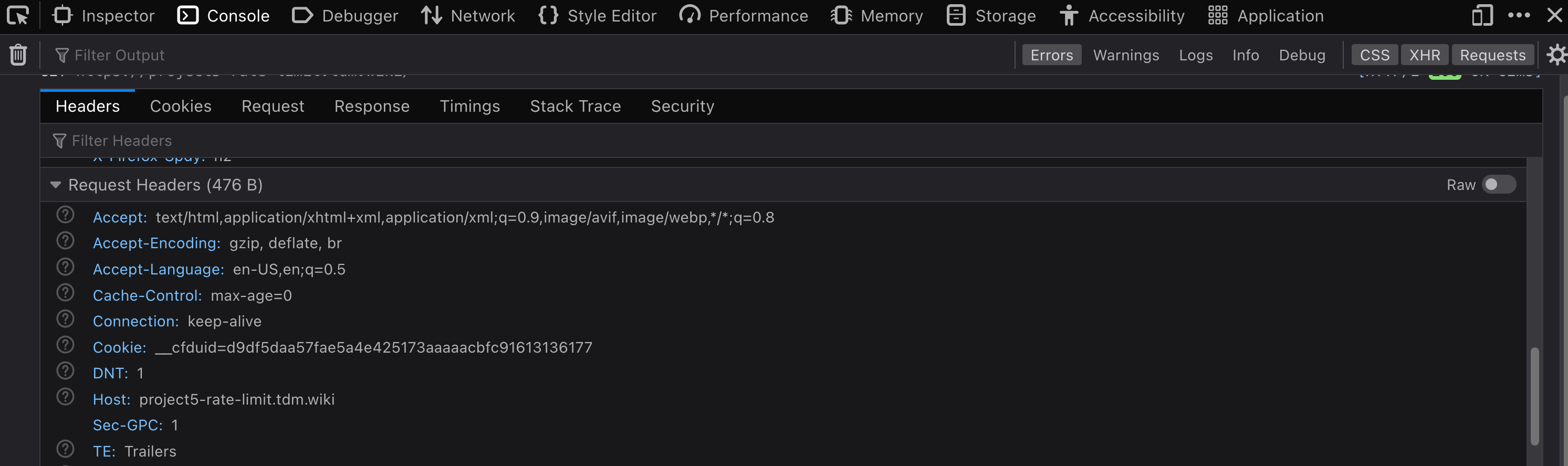

1. Take a look at the pandas docs. There are a lot of formats that pandas has the ability to read. The most popular formats in this course are: csv (with commas or some other separator), excel, json, or some database. CSV is very prevalent, but it was not designed to work well with large amounts of data. Newer formats like parquet and feather are designed from the ground up to be efficient, and take advantage of special processor instruction set called SIMD. The benefits of using these formats can be significant. Let's do some experiments!

How much space do each of the following files take up on Scholar: 2018.csv, 2018.parquet, and 2018.feather? How much smaller (as a percentage) is the parquet file than the csv? How much smaller (as a percentage) is the feather file than the csv? Use f-strings to format the percentages.

Time reading in the following files: 2018.csv, 2018.parquet, and 2018.feather. How much faster (as a percentage) is reading the parquet file than the csv? How much faster (as a percentage) is reading the feather file than the csv? Use f-strings to format the percentages.

To time a piece of code, you can use the block-timer package:

from block_timer.timer import Timer

with Timer(title="Using dict to declare a dict") as t1:

my_dict = dict()

with Timer(title="Using {} to declare a dict") as t2:

my_dict = {}

# or if you need more fine-tuned values

print(t1.elapsed)

print(t2.elapsed)Read the 2018.csv file into a pandas DataFrame called my2018. Time writing the contents of my2018 to the following files: 2018.csv, 2018.parquet, and 2018.feather. Write the files to your scratch directory: /scratch/scholar/<username>, where <username> is your username. How much faster (as a percentage) is writing the parquet file than the csv? How much faster (as a percentage) is writing the feather file than the csv? Use f-strings to format the percentages.

Relevant topics: pandas read_csv, pandas to_csv

Item(s) to submit:

- Python code used to solve the problem.

- Output from running your code.

Solution

import pandas as pd

from block_timer.timer import Timer

with Timer(title="csv") as csv:

myDF = pd.read_csv("/class/datamine/data/stackoverflow/unprocessed/2018.csv")

with Timer(title="parquet") as parquet:

myDF = pd.read_parquet("/class/datamine/data/stackoverflow/unprocessed/2018.parquet")

with Timer(title="feather") as feather:

myDF = pd.read_feather("/class/datamine/data/stackoverflow/unprocessed/2018.feather")

print(f'Reading parquet is {csv.elapsed/parquet.elapsed-1.0:.2%} faster than csv.')

print(f'Reading feather is {csv.elapsed/feather.elapsed-1.0:.2%} faster than csv.')from block_timer.timer import Timer

myDF = pd.read_csv("/class/datamine/data/stackoverflow/unprocessed/2018.csv")

with Timer(title="csv") as csv:

myDF.to_csv("/scratch/scholar/kamstut/2018.csv")

with Timer(title="parquet") as parquet:

myDF.to_parquet("/scratch/scholar/kamstut/2018.parquet")

with Timer(title="feather") as feather:

myDF.to_feather("/scratch/scholar/kamstut/2018.feather")

print(f'Writing parquet is {csv.elapsed/parquet.elapsed-1.0:.2%} faster than csv.')

print(f'Writing feather is {csv.elapsed/feather.elapsed-1.0:.2%} faster than csv.')from pathlib import Path

csv = Path('/class/datamine/data/stackoverflow/unprocessed/2018.csv').stat().st_size

parquet = Path('/class/datamine/data/stackoverflow/unprocessed/2018.parquet').stat().st_size

feather = Path('/class/datamine/data/stackoverflow/unprocessed/2018.feather').stat().st_size

print(f'The parquet file is {csv/parquet:.2%} smaller than csv.')

print(f'Writing feather is {csv/feather:.2%} smaller than csv.')2. A method is just a function associated with an object or class. For example, mean is just a method of the pandas DataFrame:

# myDF is an object of class DataFrame

# mean is a method of the DataFrame class

myDF.mean()In pandas there are two main methods used for indexing: loc and iloc. Use the column Student and indexing in pandas to calculate what percentage of respondents are students and not students. Consider the respondent to be a student if the Student column is anything but "No". Create a new DataFrame called not_students that is a subset of the original dataset without students.

Relevant topics: loc/iloc/indexing

Item(s) to submit:

- Python code used to solve the problem.

- Output from running your code.

Solution

not_students = myDF.loc[myDF.loc[:,"Student"]=="No",:]

not_student_percent = len(not_students.loc[:, "Respondent"])/len(myDF.loc[:, "Respondent"])

student_percent = 1-not_student_percent

print(f"Percent not students: {not_student_percent}")

print(f"Percent students: {student_percent}")3. In pandas, if you were to isolate a single column using indexing, like this:

myDF.loc[:, "Student"]The result would be a pandas Series. A Series is the 1-dimensional equivalent of a DataFrame.

type(myDF.loc[:, "Student"]) # pandas.core.series.Seriespandas and numpy make it very easy to convert between a Series, ndarray, and list. Here is a very useful graphic to highlight how to do this. Look at the DevType column in not_students. As you can see, a single value may contain a list of semi-colon-separated professions. Create a list with a unique group of all the possible professions. Consider each semi-colon-separated value a profession. How many professions are there?

It looks like somehow the profession "Student" got in there even though we filtered by the Student column. Use not_students to get a subset of our data for which the respondents replied "No" to Student, yet put "Student" as one of many possible DevTypes. How many respondents are in that subset?

Hint: If you have a column containing strings in pandas, and would like to use string methods on every string in the column, you can use .str. For example:

# this would use the `strip` string method on each value in myColumn, and compare them to ''

# `contains` is another useful string method...

myDF.loc[myDF.loc[:, "myColumn"].str.strip() == '', :]Hint: See here.

Relevant topics: list comprehensions, for loops, loc/iloc/indexing

Item(s) to submit:

- Python code used to solve the problem.

- Output from running your code.

- The number of professions there are.

-

The number of respondents that replied "No" to

Student, yet put "Student" as theDevType.

Solution

professions = [p.split(";") for p in not_students.loc[:, "DevType"].dropna().tolist()]

# unnest the nested lists

professions = [p for li in professions for p in li]

professions = list(set(professions))

print(professions)

print(len(professions))result = myDF.loc[(myDF.loc[:, "Student"]=="No") & (myDF.loc[:, "DevType"].str.contains("Student")),:]

len(result)4. As you can see, while perhaps a bit more strict, indexing in pandas is not that much more difficult than indexing in R. While not always necessary, remembering to put ":" to indicate "all columns" or "all rows" makes life easier. In addition, remembering to put parentheses around logical groupings is also a good thing. Practice makes perfect! Randomly select 100 females and 100 males. How many of each sample is in each Age category? (Do not use the sample method yet, but instead use numeric indexing and random)

import random

print(f"A random integer between 1 and 100 is {random.randint(1, 101)}")## A random integer between 1 and 100 is 37It would be nice to visualize these results. pandas Series have some built in methods to create plots. Use [this] method to generate a bar plot for both females and males. How do they compare?

Hint: You may need to import matplotlib in order to display the graphic:

import matplotlib.pyplot as plt

# female barplot code here

plt.show()

# male barplot code here

plt.show()Hint: Once you have your female and male DataFrames, the value_counts method found here may be particularly useful.

Relevant topics: list comprehensions, for loops, loc/iloc/indexing, barplot

Item(s) to submit:

- Python code used to solve the problem.

- Output from running your code.

Solution

import random

import matplotlib.pyplot as plt

males = myDF.loc[(myDF.loc[:, "Gender"]=="Male"), :]

random_indices = [random.randint(0, len(males)) for i in range(0,100)]

males = males.iloc[random_indices, ]

print(males.loc[:,"Age"].value_counts())

females = myDF.loc[(myDF.loc[:, "Gender"]=="Female"), :]

random_indices = [random.randint(0, len(females)) for i in range(0,100)]

females = females.iloc[random_indices]

print(females.loc[:,"Age"].value_counts())

males.loc[:,"Age"].value_counts().plot.bar()

plt.show()

females.loc[:,"Age"].value_counts().plot.bar()

plt.show()5. pandas really helps out when it comes to working with data in Python. This is a really cool dataset, use your newfound skills to do a mini-analysis. Your mini-analysis should include 1 or more graphics, along with some interesting observation you made while exploring the data.

Relevant topics:

Item(s) to submit:

- Python code used to solve the problem.

- Output from running your code.

- A graphic.

- 1-2 sentences explaining your interesting observation and graphic.

Solution

Could be anything.

Project 6

Motivation: Being able to analyze and create good visualizations is a skill that is invaluable in many fields. It can be pretty fun too! In this project, we are going to take a small hiatus from the regular stream of projects to do some data visualizations.

Context: We've been working hard all semester and learning valuable skills. In this project we are going to ask you to examine some plots, write a little bit, and use your creative energies to create good visualizations about the flight data.

Scope: python, r, visualizing data

Learning objectives:

- Demostrate the ability to create basic graphs with default settings.

- Demonstrate the ability to modify axes labels and titles.

- Demonstrate the ability to customize a plot (color, shape/linetype).

Make sure to read about, and use the template found here, and the important information about projects submissions here.

Dataset

The following questions will use the dataset found in Scholar:

/class/datamine/data/flights/*.csv (all csv files)

Questions

2. Creating More Effective Graphs by Dr. Naomi Robbins and The Elements of Graphing Data by Dr. William Cleveland at Purdue University, are two excellent books about data visualization. Read the following excerpts from the books (respectively), and list 2 things you learned, or found interesting from each book.

Item(s) to submit:

- Two bullets for each book with items you learned or found interesting.

3. Of the 7 posters with at least 3 plots and/or maps, choose 1 poster that you think you could improve upon or "out plot". Create 4 plots/maps that either:

- Improve upon a plot from the poster you chose, or

- Show a completely different plot that does a good job of getting an idea or observation across, or

- Ruin a plot. Purposefully break the best practices you've learned about in order to make the visualization misleading. (limited to 1 of the 4 plots)

For each plot/map where you choose to do (1), include 1-2 sentences explaining what exactly you improved upon and how. Point out some of the best practices from the 2 provided texts that you followed. For each plot/map where you choose to do (2), include 1-2 sentences explaining your graphic and outlining the best practices from the 2 texts that you followed. For each plot/map where you choose to do (3), include 1-2 sentences explaining what you changed, what principle it broke, and how it made the plot misleading or worse.

While we are not asking you to create a poster, please use RMarkdown to keep your plots, code, and text nicely formatted and organized. The more like a story your project reads, the better. You are free to use either R or Python or both to complete this project. Please note that it would be unadvisable to use an interactive plotting package like plotly, as these packages will not render plots from within RMarkdown in RStudio.

Some useful R packages:

- base R functions: bar, plot, lines, etc.

- usmap

- ggplot

Some useful Python packages:

Item(s) to submit:

- All associated R/Python code you used to wrangling the data and create your graphics.

- 4 plots, with at least 4 associated RMarkdown code chunks.

- 1-2 sentences per plot explaining what exactly you improved upon, what best practices from the texts you used, and how. If it is a brand new visualization, describe and explain your graphic, outlining the best practices from the 2 texts that you followed. If it is the ruined plot you chose, explain what you changed, what principle it broke, and how it made the plot misleading or worse.

4. Now that you've been exploring data visualization, copy, paste, and update your first impressions from question (1) with your updated impressions. Which impression changed the most, and why?

Item(s) to submit:

- 8 bullets with updated impressions (still just a sentence or two) from question (1).

- A sentence explaining which impression changed the most and why.

Project 7

Motivation: There is one pretty major topic that we have yet to explore in Python -- functions! A key component to writing efficient code is writing functions. Functions allow us to repeat and reuse coding steps that we used previously, over and over again. If you find you are repeating code over and over, a function may be a good way to reduce lots of lines of code.

Context: We are taking a small hiatus from our pandas and numpy focused series to learn about and write our own functions in Python!

Scope: python, functions, pandas

Learning objectives:

- Comprehend what a function is, and the components of a function in Python.

- Differentiate between positional and keyword arguments.

Make sure to read about, and use the template found here, and the important information about projects submissions here.

Dataset

The following questions will use the dataset found in Scholar:

/class/datamine/data/yelp/data/parquet

Questions

1. You've been given a path to a folder for a dataset. Explore the files. Give a brief description of the files and what each file contains.

Note: Take a look at the size of each of the files. If you are interested in experimenting, try using pandas read_json function to read the yelp_academic_dataset_user.json file in the json folder /class/datamine/data/yelp/data/json/yelp_academic_dataset_user.json. Even with the large amount of memory available to you, this should fail. In order to make it work you would need to use the chunksize option to read the data in bit by bit. Now consider that the reviews.parquet file is .3gb larger than the yelp_academic_dataset_user.json file, but can be read in with no problem. That is seriously impressive!

Relevant topics: read_parquet

Item(s) to submit:

- Python code used to solve the problem.

- Output from running your code.

- The name of each dataset and a brief summary of each dataset. No more than 1-2 sentences about each dataset.

Solution

2. Read the businesses.parquet file into a pandas DataFrame called businesses. Take a look to the hours and attributes columns. If you look closely, you'll observe that both columns contain a lot more than a single feature. In fact, the attributes column contains 39 features and the hours column contains 7!

len(businesses.loc[:, "attributes"].iloc[0].keys()) # 39

len(businesses.loc[:, "hours"].iloc[0].keys()) # 7Let's start by writing a simple function. Create a function called has_attributes that takes a business_id as an argument, and returns True if the business has any attributes and False otherwise. Test it with the following code:

print(has_attributes('f9NumwFMBDn751xgFiRbNA')) # True

print(has_attributes('XNoUzKckATkOD1hP6vghZg')) # False

print(has_attributes('Yzvjg0SayhoZgCljUJRF9Q')) # True

print(has_attributes('7uYJJpwORUbCirC1mz8n9Q')) # FalseWhile this is useful to get whether or not a single business has any attributes, if you wanted to apply this function to the entire attributes column/Series, you would just use the notna method:

businesses.loc[:, "attributes"].notna()Important note: Make sure your return value is of type bool. To check this:

type(True) # bool

type("True") # strRelevant topics: pandas indexing, functions

Item(s) to submit:

- Python code used to solve the problem.

- Output from running the provided "test" code.

3. Take a look at the attributes of the first business:

businesses.loc[:, "attributes"].iloc[0]What is the type of the value? Let's assume the company you work for gets data formatted like businesses each week, but your boss wants the 39 features in attributes and the 7 features in hours to become their own columns. Write a function called fix_businesses_data that accepts an argument called data_path (of type str) that is a full path to a parquet file that is in the exact same format as businesses.parquet. In addition to the data_path argument, fix_businesses_data should accept another argument called output_dir (of type str). output_dir should contain the path where you want your "fixed" parquet file to output. fix_businesses_data should return None.

The result of your function, should be a new file called new_businesses.parquet saved in the output_dir, the data in this file should no longer contain either the attributes or hours columns. Instead, each row should contain 39+7 new columns. Test your function out:

from pathlib import Path

my_username = "kamstut" # replace "kamstut" with YOUR username

fix_businesses_data(data_path="/class/datamine/data/yelp/data/parquet/businesses.parquet", output_dir=f"/scratch/scholar/{my_username}")

# see if output exists

p = Path(f"/scratch/scholar/{my_username}").glob('**/*')

files = [x for x in p if x.is_file()]

print(files)Important note: Make sure that either /scratch/scholar/{my_username} or /scratch/scholar/{my_username}/ will work as arguments to output_dir. If you use the pathlib library, as shown in the provided function "skeleton" below, both will work automatically!

from pathlib import Path

def fix_businesses_data(data_path: str, output_dir: str) -> None:

"""

fix_data accepts a parquet file that contains data in a specific format.

fix_data "explodes" the attributes and hours columns into 39+7=46 new

columns.

Args:

data_path (str): Full path to a file in the same format as businesses.parquet.

output_dir (str): Path to a directory where new_businesses.parquet should be output.

"""

# read in original parquet file

businesses = pd.read_parquet(data_path)

# unnest the attributes column

# unnest the hours column

# output new file

businesses.to_parquet(str(Path(f"{output_dir}").joinpath("new_businesses.parquet")))

return NoneHint: Check out the code below, notice how using pathlib handles whether or not we have the trailing /.

from pathlib import Path

print(Path("/class/datamine/data/").joinpath("my_file.txt"))

print(Path("/class/datamine/data").joinpath("my_file.txt"))Hint: You can test out your function on /class/datamine/data/yelp/data/parquet/businesses_sample.parquet to not waste as much time.

Hint: If we were using R and the tidyverse package, this sort of behavior is called "unnesting". You can read more about it here.

Hint: This stackoverflow post should be very useful! Specifically, run this code and take a look at the output:

businesses

businesses.loc[0:4, "attributes"].apply(pd.Series)Notice that some rows have json, and others have None:

businesses.loc[0, "attributes"] # has json

businesses.loc[2, "attributes"] # has NoneThis method allows us to handle both cases. If the row has json it converts the values, if it has None it just puts each column with a value of None.

Hint: Here is an example that shows you how to concatenate (combine) dataframes.

Relevant topics: pandas indexing, functions, concat, apply

Item(s) to submit:

- Python code used to solve the problem.

- Output from running your code.

4. That's a pretty powerful function, and could definitely be useful. What if, instead of working on just our specifically formatted parquet file, we wrote a function that worked for any pandas DataFrame? Write a function called unnest that accepts a pandas DataFrame as an argument (let's call this argument myDF), and a list of columns (let's call this argument columns), and returns a DataFrame where the provided columns are unnested.

Important note: You may write unnest so that the resulting dataframe contains the original dataframe and the unnested columns, or you may return just the unnested columns -- both will be accepted solutions.

Hint: The following should work:

businesses = pd.read_parquet("/class/datamine/data/yelp/data/parquet/businesses.parquet")

new_businesses_df = unnest(businesses, ["attributes", ])

new_businesses_df.shape # (209393, 39)

new_businesses_df.head()

new_businesses_df = unnest(businesses, ["attributes", "hours"])

new_businesses_df.shape # (209393, 46)

new_businesses_df.head()Relevant topics: pandas indexing, functions, apply, drop

Item(s) to submit:

- Python code used to solve the problem.

- Output from running the provided code.

5. Try out the code below. If a provided column isn't already nested, the column name is ruined and the data is changed. If the column doesn't already exist, a KeyError is thrown. Modify our function from question (4) to skip unnesting if the column doesn't exist. In addition, modify the function from question (4) to skip the column if the column isn't nested. Let's consider a column nested if the value of the column is a dict, and not nested otherwise.

businesses = pd.read_parquet("/class/datamine/data/yelp/data/parquet/businesses.parquet")

new_businesses_df = unnest(businesses, ["doesntexist",]) # KeyError

new_businesses_df = unnest(businesses, ["postal_code",]) # not nestedTo test your code, run the following. The result should be a DataFrame where attributes has been unnested, and that is it.

businesses = pd.read_parquet("/class/datamine/data/yelp/data/parquet/businesses.parquet")

results = unnest(businesses, ["doesntexist", "postal_code", "attributes"])

results.shape # (209393, 39)

results.head()Hint: To see if a variable is a dict you could use type:

my_variable = {'key': 'value'}

type(my_variable)## <class 'dict'>Relevant topics: pandas indexing, functions, apply, drop

Item(s) to submit:

- Python code used to solve the problem.

- Output from running the provided code.

Project 8

Motivation: A key component to writing efficient code is writing functions. Functions allow us to repeat and reuse coding steps that we used previously, over and over again. If you find you are repeating code over and over, a function may be a good way to reduce lots of lines of code. There are some pretty powerful features of functions that we have yet to explore that aren't necessarily present in R. In this project we will continue to learn about and harness the power of functions to solve data-driven problems.

Context: We are taking a small hiatus from our pandas and numpy focused series to learn about and write our own functions in Python!

Scope: python, functions, pandas

Learning objectives:

- Comprehend what a function is, and the components of a function in Python.

- Differentiate between positional and keyword arguments.

- Learn about packing and unpacking variables and arguments.

Make sure to read about, and use the template found here, and the important information about projects submissions here.

Dataset

The following questions will use the dataset found in Scholar:

/class/datamine/data/yelp/data/parquet

Questions

1. The company you work for is assigning you to the task of building out some functions for the new API they've built. Please load these two pandas DataFrame's:

users = pd.read_parquet("/class/datamine/data/yelp/data/parquet/users.parquet")

reviews = pd.read_parquet("/class/datamine/data/yelp/data/parquet/reviews.parquet")You do not need these four DataFrames in this project.

photos = pd.read_parquet("/class/datamine/data/yelp/data/parquet/photos.parquet")

businesses = pd.read_parquet("/class/datamine/data/yelp/data/parquet/businesses.parquet")

checkins = pd.read_parquet("/class/datamine/data/yelp/data/parquet/checkins.parquet")

tips = pd.read_parquet("/class/datamine/data/yelp/data/parquet/tips.parquet")You would expect that friends may have a similar taste in restaurants or businesses. Write a function called get_friends_data that accepts a user_id as an argument, and returns a pandas DataFrame with the information in the users DataFrame for each friend of user_id. Look at the solutions from the previous project, as well as this page. Add type hints for your function. You should have a type hint for our argument, user_id, as well as a type hint for the returned data. In addition to type hints, make sure to document your function with a docstring.

Hint: Every function in the solutions for last week's projects has a docstring. You can use this as a reference.

Hint: You should get the same number of friends for the following code:

print(get_friends_data("ntlvfPzc8eglqvk92iDIAw").shape) # (13,22)

print(get_friends_data("AY-laIws3S7YXNl_f_D6rQ").shape) # (1, 22)

print(get_friends_data("xvu8G900tezTzbbfqmTKvA").shape) # (193,22)Note: It is sufficient to just load the first of these three examples, when you Knit your project (to save time during Knitting).

Relevant topics: pandas indexing, functions

Item(s) to submit:

- Python code used to solve the problem.

- Output from running your code.

2. Write a function called calculate_avg_business_stars that accepts a business_id and returns the average number of stars that business received in reviews. Like in question (1) make sure to add type hints and docstrings. In addition, add comments when (and if) necessary.

There is a really cool method that gives us the same "powers" that tapply gives us in R. Use the groupby method from pandas to calculate the average stars for all businesses. Index the result to confirm that your calculate_avg_business_stars function worked properly.

Hint: You should get the same average number of start value for the following code:

print(calculate_avg_business_stars("f9NumwFMBDn751xgFiRbNA")) # 3.1025641025641026Relevant topics: pandas indexing, functions, groupby, numpy.mean()

Item(s) to submit:

- Python code used to solve the problem.

- Output from running your code.

3. Write a function called visualize_stars_over_time that accepts a business_id and returns a line plot that shows the average number of stars for each year the business has review data. Like in previous questions, make sure to add type hints and docstrings. In addition, add comments when (and if) necessary. You can test your function with some of these:

visualize_stars_over_time('RESDUcs7fIiihp38-d6_6g')Relevant topics: pandas indexing, functions, matplotlib lineplot

Item(s) to submit:

- Python code used to solve the problem.

- Output from running your code.

4. Modify question (3), and add an argument called granularity that dictates whether the plot will show the average rating over years, or months. granularity should accept one of two strings: "years", or "months". By default, if granularity isn't specified, it should be "years".

visualize_stars_over_time('RESDUcs7fIiihp38-d6_6g', "months")Relevant topics: pandas indexing, functions, matplotlib lineplot

Item(s) to submit:

- Python code used to solve the problem.

- Output from running your code.

5. Modify question (4) to accept multiple business_id's, and create a line for each id. Each of the following should work:

visualize_stars_over_time("RESDUcs7fIiihp38-d6_6g", "4JNXUYY8wbaaDmk3BPzlWw", "months")

visualize_stars_over_time("RESDUcs7fIiihp38-d6_6g", "4JNXUYY8wbaaDmk3BPzlWw", "K7lWdNUhCbcnEvI0NhGewg", "months")